7.1. Measurements for medical analysis of speech#

7.1.1. Glottal inverse filtering#

7.1.1.1. Background#

Speech signals can be roughly divided into three main categories according to their production mechanism (see e.g. [Flanagan, 1972] or Section 2.3). These three categories are

voiced sounds (e.g. [a], [n]), which are excited by the airflow signal generated by fluctuation of the vocal folds,

unvoiced sounds (e.g. [s], [f]), for which the sound excitation is turbulent noise, and

plosives (e.g. [k], [p]), which are transient-type sounds generated by abruptly releasing the air flow that has been blocked by the tongue or the lips.

From these three categories, we will focus in this section on voiced sounds. This group of speech sounds has been of special interest in speech research. because voiced sounds are more common than unvoiced sounds in many languages (in English, for example, 78% of speech sounds have been reported to be voiced [Catford, 1977].

Since vocal fold vibration and glottal excitation are key in production of speech, it is important have methodology to measure them. What makes a “good” voice? If the vocal folds are subject to a disruption, how does it affect the voice? This is often considered a medical question, but disruptions of voice have also large social and societal impacts. If your vocal folds do not function well, you cannot produce a proper glottal excitation and you might loose your voice. As a result of this, you can become isolated since you loose your ability to take part in speech communication. If you work in a voice-intensive profession such as in teaching, sales, singing or acting, also your ability to work relies on your voice. Therefore, disturbance in the voice impedes your ability to work. Studying the vocal folds and the glottal excitation it generates is therefore of great importance.

The excitation of voiced speech is the air flow that streams from the lungs and generates oscillations which take place in the mucosa (mucous membrane lining) of the vocal folds. This airflow excitation waveform is formally known as the glottal volume velocity waveform, but it is typically called shortly the glottal excitation. The word glottal comes from the orifice (i.e. opening) between the two vibrating vocal folds, the glottis (Fig. 7.1). The glottal excitation has an important role in speech communication because it is the acoustical source for most of the speech sounds that we use in our daily speech communication. Vibration of the vocal folds is quasi-periodic, that is, almost regular. This vibration results in a quasi-periodic structure of the glottal excitation, which in turn results in a quasi-periodic structure of the generated speech pressure signal. The vibration frequency of the vocal folds determines the fundamental frequency (F0) of the glottal excitation–and the F0 of the generated speech signal as well—and is therefore the source of pitch in speech. The vibration mode of the vocal folds is known as the phonation type which is varied by talkers when they change voice quality of their speech to sound, for example, breathy or pressed. For more information about analysis and utilization of voice source information, the reader is referred to the review article by [Kadiri et al., 2021].

Fig. 7.1 Vocal folds (left), seen from above. The open space between the vocal folds is called the glottis. The glottis is open in the upper panel and closed in the lower panel. The vocal folds are located in the larynx (right).#

The vocal folds are located in the neck, covered and surrounded by cartilages. Direct measurement of the vocal folds is therefore difficult. As an example, visual imaging of the vocal folds by inserting an image sensor into the mouth/nostrils is uncomfortable to say the least. Even when it is possible, it is an invasive measurement impeding normal production of speech and giving measurements a bias of an unknown size. Moreover, since the vocal folds oscillate with a fundamental frequency that can be several hundreds of Hz, high-speed video imaging is needed. As an example, if F0 is equal to 400 Hz, an imaging system with a frame rate of 4000 Hz is needed to get 10 images of the vocal folds per one fundamental cycle. While high-speed imaging systems are today readily available, they need a lot of light, which generates heat, which might damage sensitive tissues in the larynx. Imaging with other methods, X-rays or magnetic resonance imaging, generally have a slower frame rate and some imaging techniques (like X-rays) also generate harmful radiation (especially at high frame rates). The cartilage surrounding the vocal folds also prevents ultrasound measurements.

The most widely used direct measurement of the vocal folds is electroglottography (EGG) (for a review, see [Herbst, 2020]). EGG measures the time-varying impedance of the vocal folds using electrodes that are attached to the skin in the neck. EGG measures conductivity, which is dependent on the contact of the vocal folds when the glottis opens and closes. Thus, EGG gives information about the vocal fold contact area during a glottal cycle. However, this information is usually one-dimensional which limits the usability of the measurement. EGG signals are also sensitive to the placement of the electrodes. EGG signals are also unable to carry useful information in cases when there is no contact of the vocal folds which happens, for example, in production of soft speech.

Even though the direct measurement of the vocal folds is difficult, recording the acoustic output of the voice production mechanism, the speech signal, is easy. With a microphone, we can record the speech pressure signal emitted from the mouth, and try to deduce the glottal excitation from the speech sound. The procedure is minimally invasive, because we do not need to insert any sensors inside or onto the speaker’s body. The airflow through the glottis is closely related to the movements of the vocal folds; when the vocal folds are open, air can flow and when they are closed, airflow is stopped.

When the glottal excitation transmits from the vocal folds through the oral and nasal cavities, it is acoustically shaped by the vocal tract (for details, again, see Speech production and acoustic properties); some frequencies are emphasised and others attenuated. To estimate the glottal excitation, we therefore need to cancel the acoustic effect of the vocal tract. Recall that the effect of the vocal tract can be efficiently modelled by, for example, a linear predictive filter (by assuming that the speech production apparatus is a linear system). We can thus first estimate a filter to model the effect of the vocal tract and then cancel the effect of the vocal tract from speech by filtering the speech signal through the vocal tract inverse filter. This process to estimate the glottal excitation from speech pressure signal recorded by a microphone is known as glottal inverse filtering (GIF) [Alku, 2011].

7.1.1.2. Glottal inverse filtering methods#

The estimation of the glottal excitation based on GIF has been studied since the 1950’s. The early studies used analog antiresonance circuits to cancel the effect of the vocal tract. Since the 1970s, digital signal processing has been used in the development of GIF methods. The developed technologies differ mainly in the way the vocal tract transfer function is estimated. Most methods are based on LP analysis, which assumes that the vocal tract transfer function can be approximated by an all-pole filter. A widely used LP-based GIF method is closed phase (CP) analysis [Wong et al., 1979]. It is based on computing the vocal tract transfer function with LP from speech samples in the closed phase of the glottal cycle when there is excitation through the vocal folds. Another popular GIF method is iterative adaptive inverse filtering (IAIF) [Alku, 1992]. In this method, the average effect of the glottal source on the speech spectrum during the open phase and closed phase of the glottal cycle is first cancelled from speech after which the vocal tract is estimated with LP. Examples of more recent GIF methods based on different variants of LP analysis are the quasi-closed phase (QCP) analysis [Airaksinen et al., 2014] and the quadratic programming (QPR) approach [Airaksinen et al., 2016]. GIF methods have also been developed based on the joint optimization of the source and filter [Fu and Murphy, 2006, Schleusing et al., 2013, Auvinen et al., 2014, Alzamendi and Schlotthauer, 2017]. In these methods, parametric artificial glottal source models are used to represent the glottal flow pulse or its derivative in a parametric form. Another approach for GIF is state-space modelling which is based on a concatenated tube model of the vocal tract and the Liljencrants-Fant model of the source [Sahoo and Routray, 2016]. By optimizing the model using extended Kalman filtering, estimates of the glottal source and intermediate pressure values within the vocal tract are obtained. GIF methods have also been developed using a combination of causal (minimum phase) and anticausal (maximum phase) components of the speech signal [Bozkurt et al., 2005, Drugman et al., 2011, Drugman et al., 2012]. In these methods, the response of the vocal tract and the return phase of the glottal flow are considered as causal signals, and the open phase of the glottal flow is considered as an anticausal signal. These signals are separated by a mixed-phase decomposition using analysis synchronized with the time instants of glottal closure.

7.1.2. Speech-based biomarking of state of health#

The main function of speech is to enable communication between people by transferring linguistic information between speakers. In addition to its linguistic content, the speech signal, however, includes plenty of other information. This information includes paralinguistic issues such as vocal emotions (e.g. angry/sad/happy speech) and speaker traits (e.g. gender, age, height etc. of the speaker). One research topic that belongs to the latter category is biomarking the speaker’s state of health using his or her speech signal. As an example, the goal of biomarking could be to detect from speech whether the speaker has Covid-19 or not. This topic calls for signal processing and machine learning methodologies and has become an area of increasing interest in speech technology. The major issues underpinning this research topic will be shortly described in this section.

7.1.2.1. Benefits of the speech-based biomarking technology#

The speech-based biomarking of state of health should not be seen as a technology to replace the true clinical diagnosis and care of patients. However, the speech-based biomarking of human health has a few benefits, which make it a justified topic of health and wellbeing technology. First, the input signal to the biomarking system, the speech microphone signal, can be recorded non-invasively in a comfortable manner using a cost-effective devise (e.g. phone). Second, the speech-based biomarking can be conducted outside hospital using a system that is easy to administer and can be used by the patient at home, thereby avoiding frequent and often inconvenient visits to the clinic. This is particularly useful, for example, for neurodegenerative diseases such as Parkinson’s disease and Alzheimer’s disease for which the speech-based biomarking can be used, in principle, in the early detection of the disease from telephone speech recordings. Even though the speech-based biomarking does not replace clinical examinations, it can be used in preventive healthcare technology to detect diseases at an early stage and to track physiological changes caused by the disease.

7.1.2.2. Machine learning tasks used in the study area#

The most widely studied task in the topic is the detection task, that is, the binary classification problem in which speakers with a certain disorder (e.g. Parkinson’s disease, Covid-19 etc.) are distinguished automatically from healthy controls based on the recorded speech signals from both classes. In addition to the detection task, some investigations have addressed the biomarking topic from the multiclass classification’s point of view by studying, for example, 4-class classification where patients suffering from three known voice production disorders are classified from healthy talkers [Chui et al., 2020]. The severity assessment of the underlying disorder has also been studies as a regression problem, for example, related to Parkinson’s disease [Bayestehtashk et al., 2015] and as a multi-class classification problem related to dysarthria [Narendra and Alku, 2021]. Some studies have addressed progression of the underlying disorder using longitudinal analysis (e.g. [Arias-Vergara et al., 2018]).

7.1.2.3. Technologies for the speech-based detection of disorders#

Let us next take a closer look at the technologies that have been used in the detection task described above. The technologies developed can be roughly divided into two categories: (1) conventional pipeline systems and (2) modern end-to-end systems.

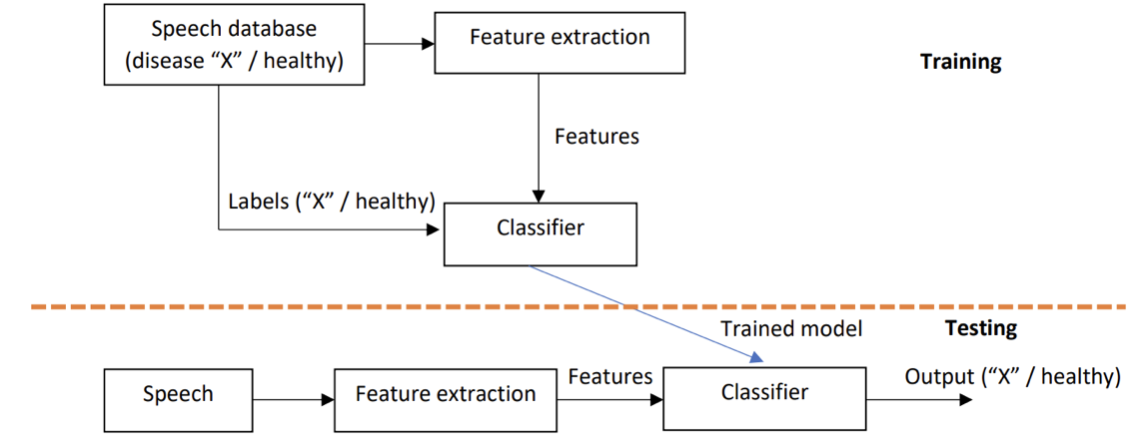

A detection system based on the conventional pipeline architecture consists of two separate parts: the feature extraction stage and the classifier stage (see Fig. 7.2). In the former, the speech signal is expressed using a compressed set of selected acoustical features. In the latter, a machine learning model is used to distinguish speech features between the two classes (disordered vs. healthy). As shown in Fig. 7.2, the detection system is data driven, that is, acoustical features are first extracted from speech signals labelled in a supervised manner (e.g. disordered vs. pathological) to train the classifier. By extracting the same acoustical features from test speech signals, a binary decision (i.e. disordered vs. healthy) can be made by the trained classifier for the input speech signal. A large number of different acoustical features have been used in the detection of disorders from speech. These features include classical, low-dimensional feature extraction methods such as mel-frequency cepstral coefficients (MFCCs) [Davis and Mermelstein, 1980] but also more high-dimensional features (such as openSMILE, [Eyben et al., 2010]) consisting of tens of different individual parameters. In the classifier part of the classical pipeline system, many conventional machine learning classifiers have been used, particularly the support vector machine (SVM) has been widely used [Kadiri and Alku, 2020].

Fig. 7.2 A general structure of a detection system based on the traditional pipeline approach. Speech database includes labelled speech signals (disease “X” vs. healthy). In the upper part, the classifier is trained using the labelled speech signals and selected features. In the lower part, the system is tested for an unseen speech signal. Classifier can be, for example, SVM.#

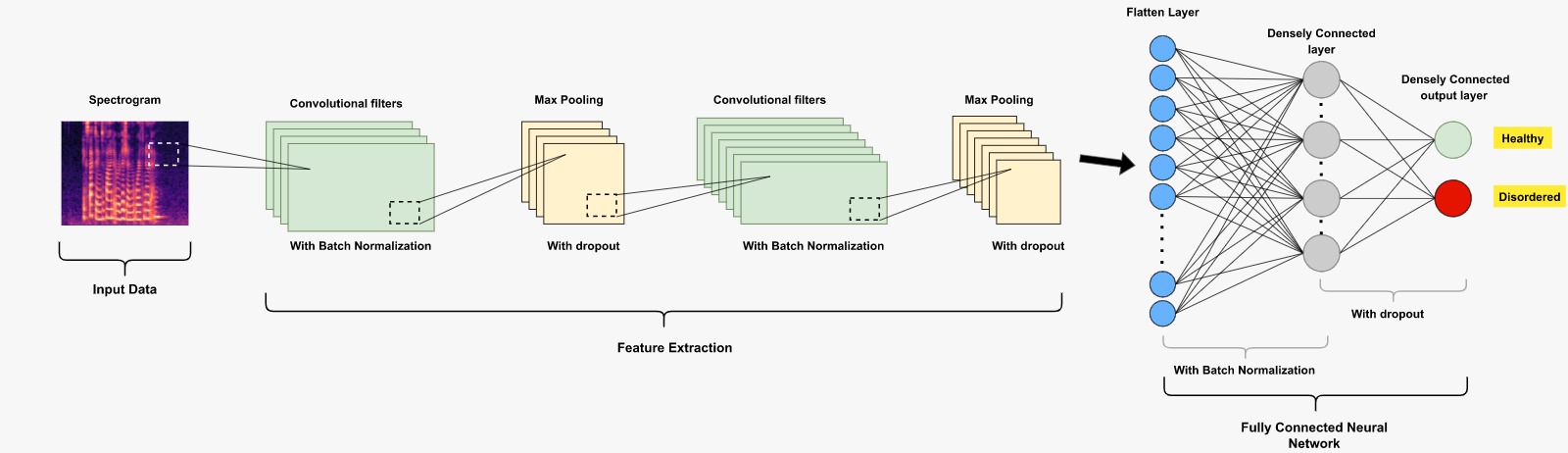

Fig. 7.3 An example of a CNN-based deep learning classification system (healthy vs. disordered).#

Classical pipeline systems have been increasingly replaced recently by end-to-end systems where the speech signal, expressed either as the raw time-domain signal (e.g. [Millet and Zeghidour, 2019]) or as the spectrogram (e.g. [Vásquez-Correa et al., 2017]), is processed directly by deep learning methods to solve the underlying detection task. A block diagram describing an end-to-end system that uses spectrogram as input to the detection network is shown in Fig. 7.3. As the deep learning architecture, most studies have used convolutional neural nets (CNNs). Some studies have also combined classical acoustic features–which are often referred to as hand-crafted features in this context–and deep-learned features (e.g. [He and Cao, 2018]). It is worth noting that deep learning -based end-to-end systems typically call for more training data than classical pipeline systems. Since the data in the topic area is recorded (partly) from patients, whose health condition might not enable long recordings, the amount of training data is typically limited in the study area. Therefore, classical pipeline systems are still a valid choice to build machine learning detection systems.

7.1.2.4. Disorders#

Neurodegenerative diseases, particularly Parkinson’s disease and Alzheimer’s disease, are becoming prevalent globally due to aging of the populations. Parkinson’s disease has particularly been studied in the area of speech-based biomarking of human health (e.g. [Bayestehtashk et al., 2015, Arias-Vergara et al., 2018, Vásquez-Correa et al., 2017]). In addition, neurodegenerative diseases such as Alzheimer’s disease (e.g. [Warnita et al., 2018]) and ALS (e.g. [Norel et al., 2018]) have been investigated in the study area. Other examples of disorders investigated are depression (e.g. [Jiang et al., 2017]), voice production disorders (e.g. [Garc\'ıa et al., 2019]) and sleep apnea (e.g. [Botelho et al., 2019]). After the outbreak of Covid-19, many papers have addressed the detection of it either from speech or from voice signals such as coughs (e.g. [Schuller et al., 2021, Sharma et al., 2022]).

7.1.2.5. Speech databases and speaking tasks#

Studying biomarking of state of health involves using data-driven approaches where network parameters are trained using real speech produced by speakers affected by the underlying health problem. Publicly available databases exist for some diseases such as dysarthria (the TORGO database [Rudzicz et al., 2012] and the UA Speech database [Kim et al., 2008]), voice production disorders (the Saarbrucken voice database, SVD [Pützer and Barry, 2010]), and special language impairment [Grill and Tučková, 2016]. Some of the open databases are, however, fairly small (e.g. with 10-20 speakers each producing a few utterances) which might limit the use of modern data-hungry deep learning networks. Speaking tasks are various, including simple repetitions of words, text reading and spontaneous speech. Some of the speaking tasks have been tailored to be more challenging to produce by the underlying patient population. An example is the diadochokinetic (DDK) task where the speaker is asked to repeat three-syllable units (i.e. /pa/-/ta/-/ka/). The DDK task is widely used in studying Parkinson’s disease [Rusz et al., 2011].

7.1.3. References#

Manu Airaksinen, Tom Bäckström, and Paavo Alku. Quadratic programming approach to glottal inverse filtering by joint norm-1 and norm-2 optimization. IEEE/ACM Trans. Audio Speech Lang. Process., 25(5):929 – 939, 2016. URL: https://doi.org/10.1109/TASLP.2016.2620718.

Manu Airaksinen, Tuomo Raitio, Brad Story, and Paavo Alku. Quasi closed phase glottal inverse filtering analysis with weighted linear prediction. IEEE/ACM Trans. Audio Speech Lang. Process., 22(3):596 – 607, 3 2014. URL: https://doi.org/10.1109/TASLP.2013.2294585.

Paavo Alku. Glottal wave analysis with pitch synchronous iterative adaptive inverse filtering. Speech Communications,, 11(2):109 – 118, 1992. URL: https://doi.org/10.1016/0167-6393(92)90005-R.

Paavo Alku. Glottal inverse filtering analysis of human voice production-a review of estimation and parameterization methods of the glottal excitation and their applications. Sadhana, 36(5):623–650, 2011. URL: https://doi.org/10.1007/s12046-011-0041-5.

Gabriel A Alzamendi and Gastón Schlotthauer. Modeling and joint estimation of glottal source and vocal tract filter by state-space methods. Biomed. Signal Process. Control, 37:5 – 15, 2017. URL: https://doi.org/10.1016/j.bspc.2016.12.022.

Tomas Arias-Vergara, Juan Camilo Vásquez-Correa, Juan Rafael Orozco-Arroyave, and Elmar Nöth. Speaker models for monitoring Parkinson’s disease progression considering different communication channels and acoustic conditions. Speech Communication, 101:11 – 25, 2018. URL: https://doi.org/10.1016/j.specom.2018.05.007.

Harri Auvinen, Tuomo Raitio, Manu Airaksinen, Samuli Siltanen, Brad H. Story, and Paavo Alku. Automatic glottal inverse filtering with the Markov chain Monte Carlo method. Comput. Speech Lang., 28(5):1139 – 1155, 2014. URL: https://doi.org/10.1016/j.csl.2013.09.004.

Alireza Bayestehtashk, Meysam Asgari, Izhak Shafran, and James McNames. Fully automated assessment of the severity of Parkinson's disease from speech. Computer speech & language, 29(1):172 – 185, 2015. URL: https://doi.org/10.1016/j.csl.2013.12.001.

M Catarina Botelho, Isabel Trancoso, Alberto Abad, and Teresa Paiva. Speech as a biomarker for obstructive sleep apnea detection. In International Conference on Acoustics, Speech and Signal Processing (ICASSP), 5851 – 5855. IEEE, 2019. URL: https://doi.org/10.1109/ICASSP.2019.8682431.

Baris Bozkurt, Boris Doval, Christophe d'Alessandro, and Thierry Dutoit. Zeros of z-transform representation with application to source-filter separation in speech. IEEE Sig.Pro. Letters, 12:344 – 347, 2005. URL: https://doi.org/10.1109/LSP.2005.843770.

John Cunnison Catford. Fundamental Problems in Phonetics, chapter one, pages 1–278. Indiana University Press, Bloomington, USA, 1977.

Kwok Tai Chui, Miltiadis D Lytras, and Pandian Vasant. Combined generative adversarial network and fuzzy c-means clustering for multi-class voice disorder detection with an imbalanced dataset. Applied Sciences, 10(13):4571, 2020. URL: https://doi.org/10.3390/app10134571.

Steven Davis and Paul Mermelstein. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Transactions on Acoustics, Speech, and Signal Processing, 28(4):357–366, 8 1980. URL: https://doi.org/10.1109/TASSP.1980.1163420.

Thomas Drugman, Baris Bozkurt, and Thierry Dutoit. Causal-anticausal decomposition of speech using complex cepstrum for glottal source estimation. Speech Communication, 53:855 – 866, 2011. URL: https://doi.org/10.1016/j.specom.2011.02.004.

Thomas Drugman, Baris Bozkurt, and Thierry Dutoit. A comparative study of glottal source estimation techniques. Computer Speech and Language, 26:20 – 34, 2012. URL: https://doi.org/10.1016/j.csl.2011.03.003.

Florian Eyben, Martin Wöllmer, and Björn Schuller. Opensmile: the munich versatile and fast open-source audio feature extractor. In Proceedings of the 18th ACM international conference on Multimedia, 1459 – 1462. 2010. URL: https://doi.org/10.1145/1873951.1874246.

James L. Flanagan. Speech Analysis Synthesis and Perception. Springer-Verlag, 2nd edition, 1972. URL: https://doi.org/10.1007/978-3-662-00849-2.

Qiang Fu and Peter Murphy. Robust glottal source estimation based on joint source-filter model optimization. IEEE Trans. on Audio Speech and Language Processing, 14:492 – 501, 2006. URL: https://doi.org/10.1109/TSA.2005.857807.

Jorge Andrés Gómez Garc\'ıa, Laureano Moro-Velázquez, and Juan Ignacio Godino-Llorente. On the design of automatic voice condition analysis systems. \uppercase Part II: review of speaker recognition techniques and study on the effects of different variability factors. Biomed. Signal Process. Control, 48:128 – 143, 2019. URL: https://doi.org/10.1016/j.bspc.2018.09.003.

Pavel Grill and Jana Tučková. Speech databases of typical children and children with SLI. PLoS ONE, 11(3):e0150365, 2016. URL: https://doi.org/10.1371/journal.pone.0150365.

Lang He and Cui Cao. Automated depression analysis using convolutional neural networks from speech. Journal of biomedical informatics, 83:103 – 111, 2018. URL: https://doi.org/10.1016/j.jbi.2018.05.007.

Christian T Herbst. Electroglottography – an update. J. Voice, 34(4):503 – 526, 2020. URL: https://doi.org/10.1016/j.jvoice.2018.12.014.

Haihua Jiang, Bin Hu, Zhenyu Liu, Lihua Yan, Tianyang Wang, Fei Liu, Huanyu Kang, and Xiaoyu Li. Investigation of different speech types and emotions for detecting depression using different classifiers. Speech Communication, 90:39 – 46, 2017. URL: https://doi.org/10.1016/j.specom.2017.04.001.

Sudarsana Reddy Kadiri, Paavo Alku, and B. Yegnanarayana. Extraction and utilization of excitation information of speech: a review. Proceedings of the IEEE, 109(12):1920–1941, 2021. URL: https://doi.org/10.1109/JPROC.2021.3126493.

Heejin Kim, Mark Hasegawa-Johnson, Adrienne Perlman, Jon Gunderson, Thomas S Huang, Kenneth Watkin, and Simone Frame. Dysarthric speech database for universal access research. In Proc. INTERSPEECH, 1741 – 1744. 2008.

Juliette Millet and Neil Zeghidour. Learning to detect dysarthria from raw speech. In International Conference on Acoustics, Speech and Signal Processing (ICASSP), 5831 – 5835. 2019. URL: https://doi.org/10.1109/ICASSP.2019.8682324.

Prabhakera Narendra and Paavo Alku. Automatic assessment of intelligibility in speakers with dysarthria from coded telephone speech using glottal features. Comput. Speech Lang., 65:101117, 2021. URL: https://doi.org/10.1016/j.csl.2020.101117.

Raquel Norel, Mary Pietrowicz, Carla Agurto, Shay Rishoni, and Guillermo Cecchi. Detection of Amyotrophic Lateral Sclerosis (ALS) via Acoustic Analysis. Proc. Interspeech, pages 377 – 381, 2018. URL: https://doi.org/10.21437/Interspeech.2018-2389.

Manfred Pützer and William J. Barry. Saarbrücken voice database, institute of phonetics, univ. of saarland. 2010. http://www.stimmdatenbank.coli.uni-saarland.de/.

Frank Rudzicz, Aravind Kumar Namasivayam, and Talya Wolff. The TORGO database of acoustic and articulatory speech from speakers with dysarthria. Language Resources and Evaluation, 46(4):523 – 541, 2012. URL: https://doi.org/10.1007/s10579-011-9145-0.

Jan Rusz, Roman Cmejla, Hana Ruzickova, and Evzen Ruzicka. Quantitative acoustic measurements for characterization of speech and voice disorders in early untreated Parkinson’s disease. The journal of the Acoustical Society of America, 129(1):350 – 367, 2011. URL: https://doi.org/10.1121/1.3514381.

Subhasmita Sahoo and Aurobinda Routray. A novel method of glottal inverse filtering. IEEE/ACM Trans. Audio Speech Lang. Process., 24(7):1230 – 1241, 2016. URL: https://doi.org/10.1109/TASLP.2016.2551864.

Olaf Schleusing, Tomi Kinnunen, Brad H. Story, and Jean-Marc Vesin. Joint source-filter optimization for accurate vocal tract estimation using differential evolution. IEEE Trans. Audio Speech Lang. Process., 21(8):1560 – 1572, 2013. URL: https://doi.org/10.1109/TASL.2013.2255275.

Björn W Schuller, Anton Batliner, Christian Bergler, Cecilia Mascolo, Jing Han, Iulia Lefter, Heysem Kaya, Shahin Amiriparian, Alice Baird, Lukas Stappen, and others. The INTERSPEECH 2021 computational paralinguistics challenge: COVID-19 cough, COVID-19 speech, escalation & primates. Proc. INTERSPEECH, pages 431 – 435, 2021. URL: https://doi.org/10.21437/Interspeech.2021-19.

Neeraj Kumar Sharma, Ananya Muguli, Prashant Krishnan, Rohit Kumar, Srikanth Raj Chetupalli, and Sriram Ganapathy. Towards sound based testing of COVID-19 – summary of the first diagnostics of COVID-19 using acoustics (DiCOVA) challenge. Computer Speech & Language, 73:101320, 2022. URL: https://doi.org/10.1016/j.csl.2021.101320.

J. C. Vásquez-Correa, J. R. Orozco-Arroyave, and E. Nöth. Convolutional neural network to model articulation impairments in patients with Parkinson's disease. In Proc. Interspeech, 314 – 318. 2017. URL: https://doi.org/10.21437/Interspeech.2017-1078.

Tifani Warnita, Tifani Warnita, Nakamasa Inoue, and Koichi Shinoda. Detecting Alzheimer's disease using gated convolutional neural network from audio data. Proc. Interspeech, pages 1706 – 1710, 2018. URL: https://doi.org/10.21437/Interspeech.2018-1713.

David Y. Wong, John D. Markel, and Jr. Augustine H. Gray. Least squares glottal inverse filtering from the acoustic speech waveform. IEEE Trans. Acoustics Speech Signal Process., 27(4):350–355, August 1979. URL: https://doi.org/10.1109/TASSP.1979.1163260.

Sudarsana R. Kadiri and Paavo Alku. Analysis and detection of pathological voice using glottal source features. IEEE J. Sel. Top. Signal Process., 14(2):367–379, 2020. URL: https://doi.org/10.1109/JSTSP.2019.2957988.